Creating Kinesis Firehose

In this step, we will navigate to the Kinesis Firehose console and create a delivery stream that receives the data and stores it in S3:

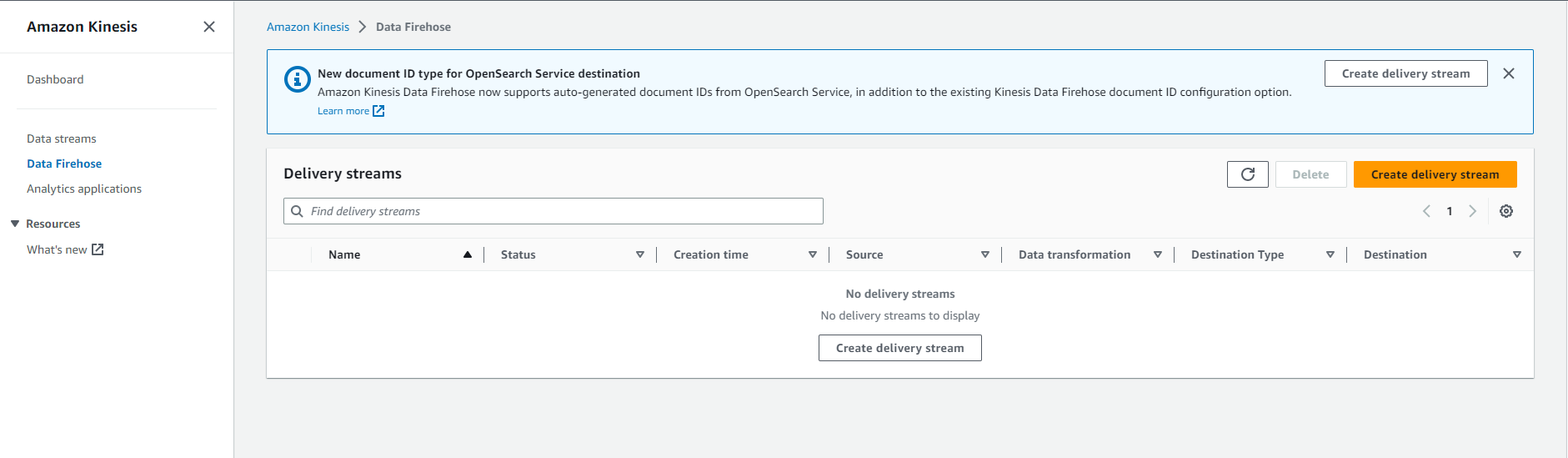

- Go to the Kinesis Firehose Console

- Click on Create delivery stream (Create data stream)

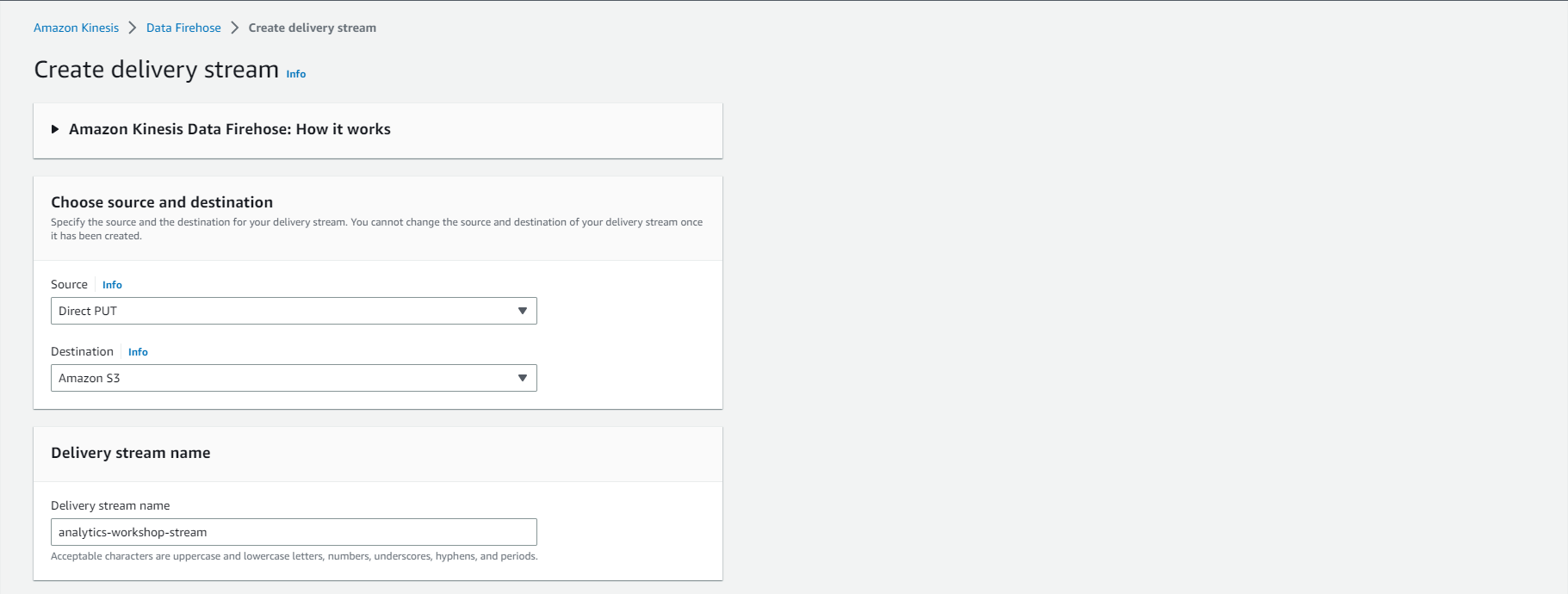

- Select source and destination

  - Source: Direct PUT

  - Destination: Amazon S3

- Delivery stream name: analytics-workshop-stream

- Convert and transform records

  - Transform source records using AWS Lambda: Disabled (Leave ‘Enable data transformation’ unchecked)

  - Convert record format: Disabled (Leave ‘Enable record format conversion’ unchecked)

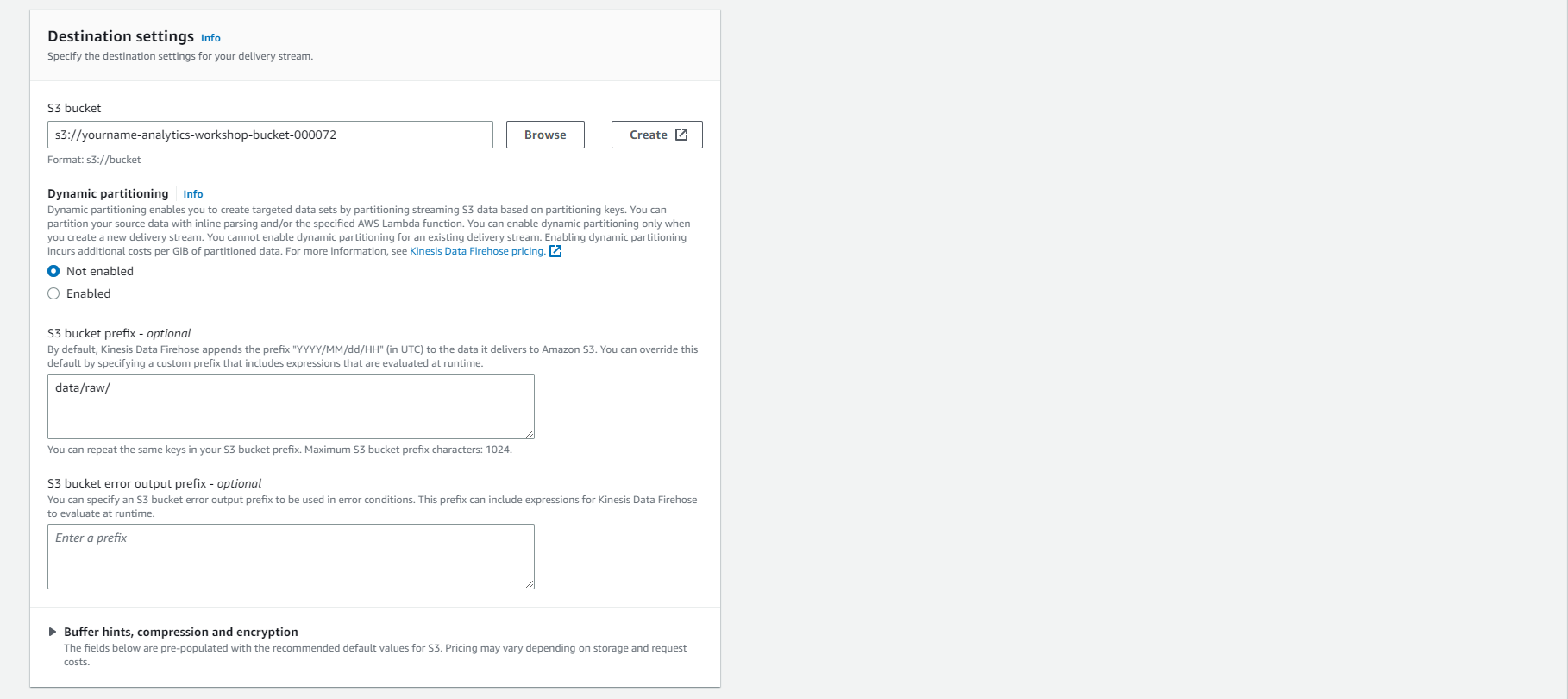

- Destination settings

  - S3 bucket: yourname-analytics-workshop-bucket

  - Dynamic partitioning: Not Enabled

  - S3 bucket prefix: data/raw/ (Note: the trailing slash / after raw is important. If you omit it, Firehose will write the data to an unexpected location)

  - S3 bucket error output prefix: Leave blank

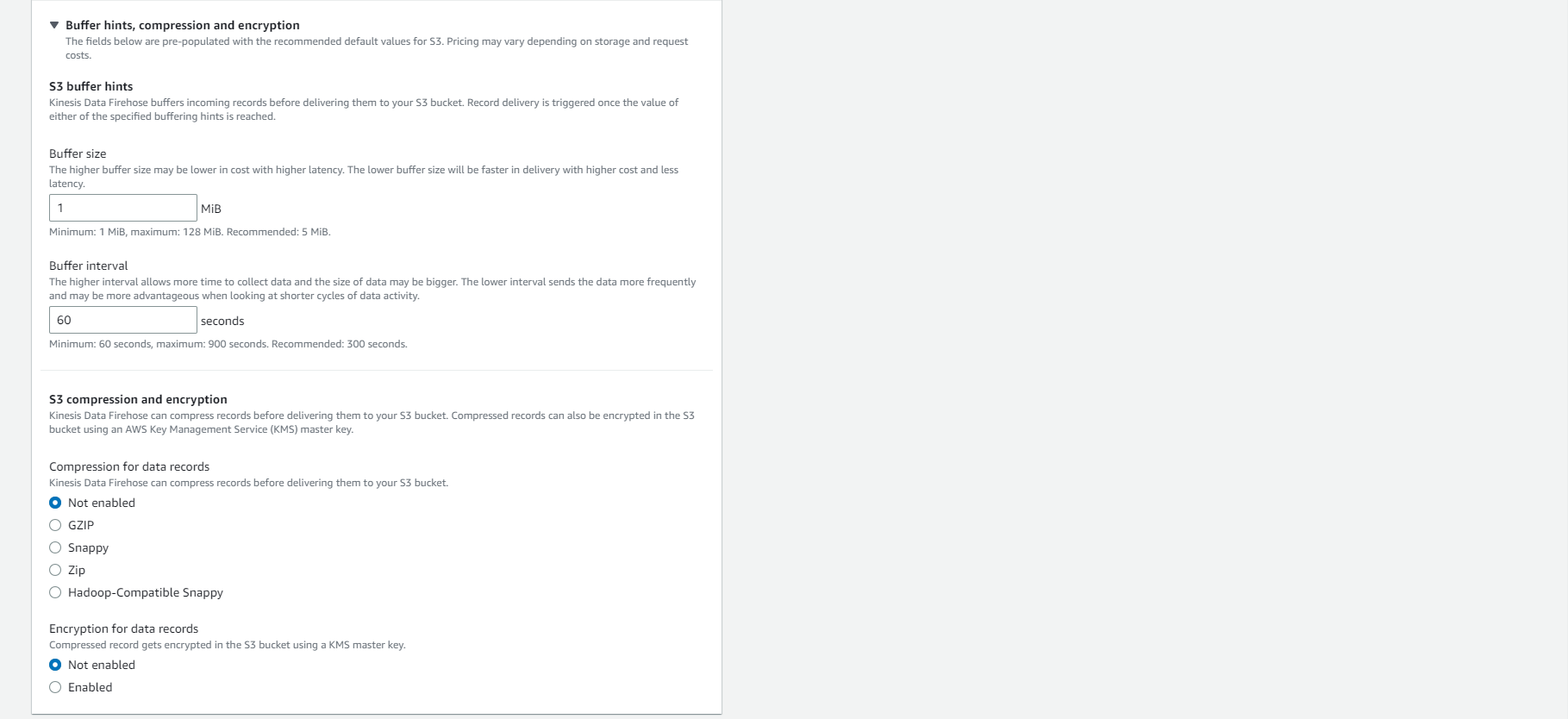
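To see why the trailing slash matters, the sketch below approximates how Firehose builds an S3 object key: it places the configured prefix directly in front of a UTC time path (`YYYY/MM/dd/HH/`) and the object name. The helper `example_object_key`, the version number `1`, and the `<random>` placeholder are illustrative assumptions, not part of any AWS SDK, and the object name is simplified.

```python
from datetime import datetime, timezone


def example_object_key(prefix: str, stream_name: str, when: datetime) -> str:
    """Approximate an S3 key: prefix + UTC time path + object name (simplified)."""
    time_path = when.strftime("%Y/%m/%d/%H/")
    timestamp = when.strftime("%Y-%m-%d-%H-%M-%S")
    # Real object names end in a random string; "<random>" stands in for it,
    # and "1" stands in for the delivery stream version (both assumptions).
    object_name = f"{stream_name}-1-{timestamp}-<random>"
    # The prefix is concatenated as-is, so a missing slash merges it with the year.
    return f"{prefix}{time_path}{object_name}"


when = datetime(2024, 1, 15, 9, 30, 0, tzinfo=timezone.utc)
with_slash = example_object_key("data/raw/", "analytics-workshop-stream", when)
without_slash = example_object_key("data/raw", "analytics-workshop-stream", when)

print(with_slash)
# data/raw/2024/01/15/09/analytics-workshop-stream-1-2024-01-15-09-30-00-<random>
print(without_slash)
# data/raw2024/01/15/09/analytics-workshop-stream-1-2024-01-15-09-30-00-<random>
```

Without the slash, objects land under `data/raw2024/...` rather than `data/raw/2024/...`, so anything that later reads from `data/raw/` would not find them.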

- Expand Buffer hints, compression and encryption

  - Buffer size: 1 MiB

  - Buffer interval: 60 seconds

  - Compression for data records: Not Enabled

  - Encryption for data records: Not Enabled

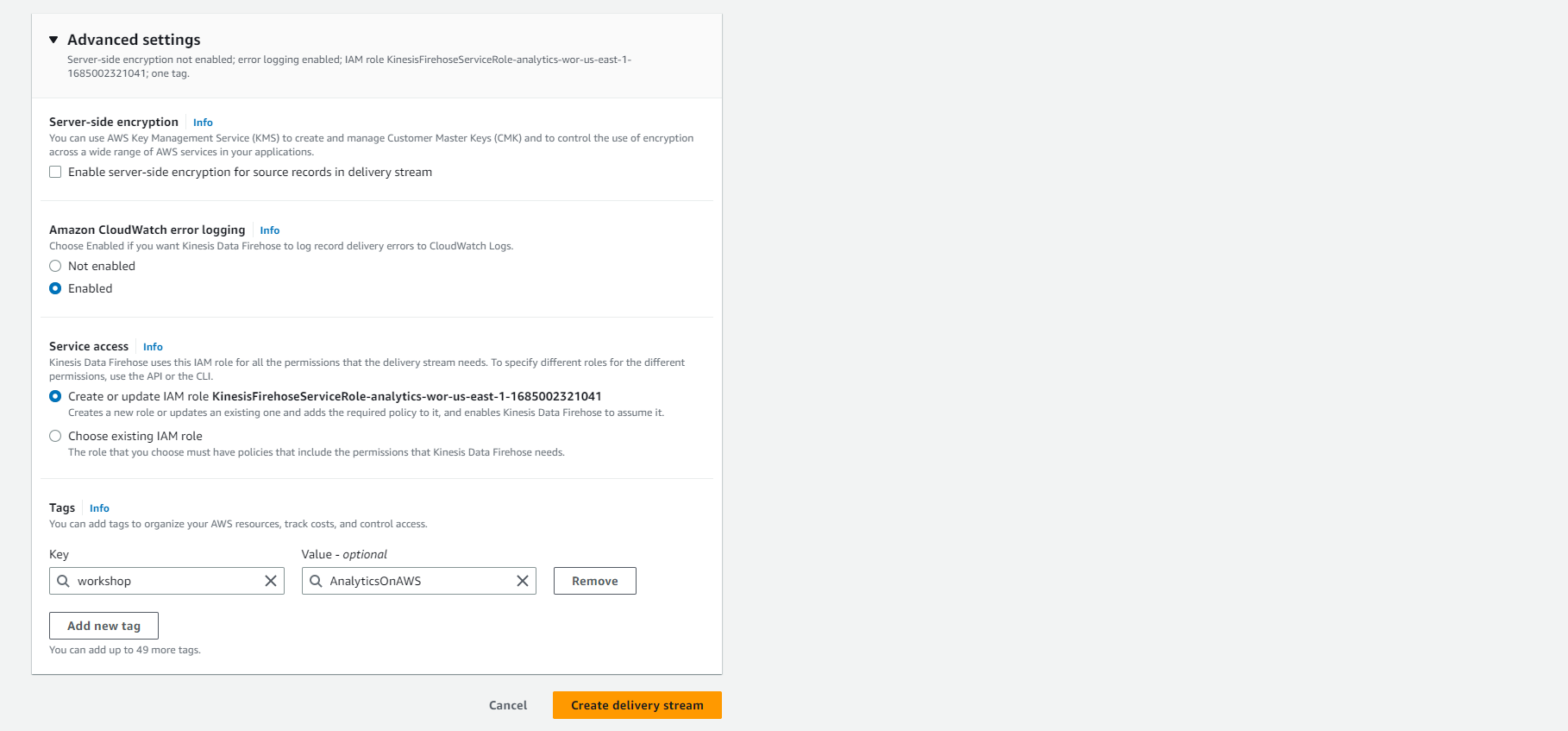
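The two buffer hints work together: Firehose delivers a batch to S3 when either the buffer size or the buffer interval is reached, whichever comes first. The following is a simplified model of that rule using the values chosen above. The function `should_flush` is a hypothetical helper for illustration only and is not how Firehose is implemented internally.

```python
BUFFER_SIZE_BYTES = 1 * 1024 * 1024   # Buffer size: 1 MiB
BUFFER_INTERVAL_SECONDS = 60          # Buffer interval: 60 seconds


def should_flush(buffered_bytes: int, seconds_since_last_flush: float) -> bool:
    """Return True when either buffering threshold has been reached."""
    size_reached = buffered_bytes >= BUFFER_SIZE_BYTES
    interval_reached = seconds_since_last_flush >= BUFFER_INTERVAL_SECONDS
    return size_reached or interval_reached


# A slow trickle of data is still delivered once the interval elapses.
print(should_flush(buffered_bytes=10_000, seconds_since_last_flush=60))     # True
# A burst of data is delivered as soon as the size threshold is hit.
print(should_flush(buffered_bytes=2 * 1024 * 1024, seconds_since_last_flush=5))  # True
# Otherwise, records keep accumulating in the buffer.
print(should_flush(buffered_bytes=10_000, seconds_since_last_flush=5))      # False
```

With a low-volume workshop data source, the 60-second interval is usually the condition that triggers delivery, so expect new objects in S3 roughly once a minute.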

- Advanced settings

  - Server-side encryption: unchecked

  - Amazon CloudWatch error logging: Enabled

  - Permissions: Create or update IAM role KinesisFirehoseServiceRole-xxxx

  - Optionally add Tags, e.g.:

    - Key: workshop

    - Value: AnalyticsOnAWS

- Review the configuration and make sure it matches the settings above, then select Create delivery stream.

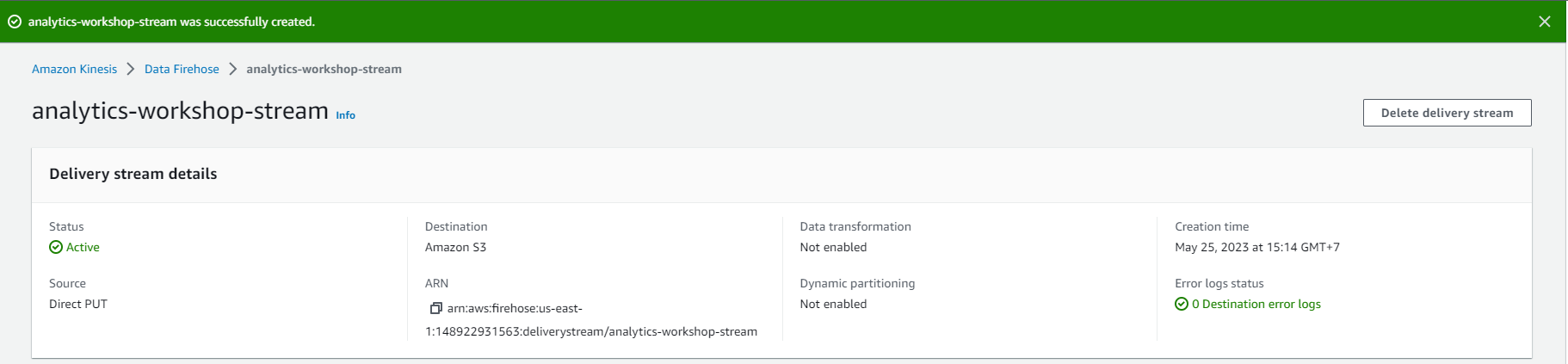

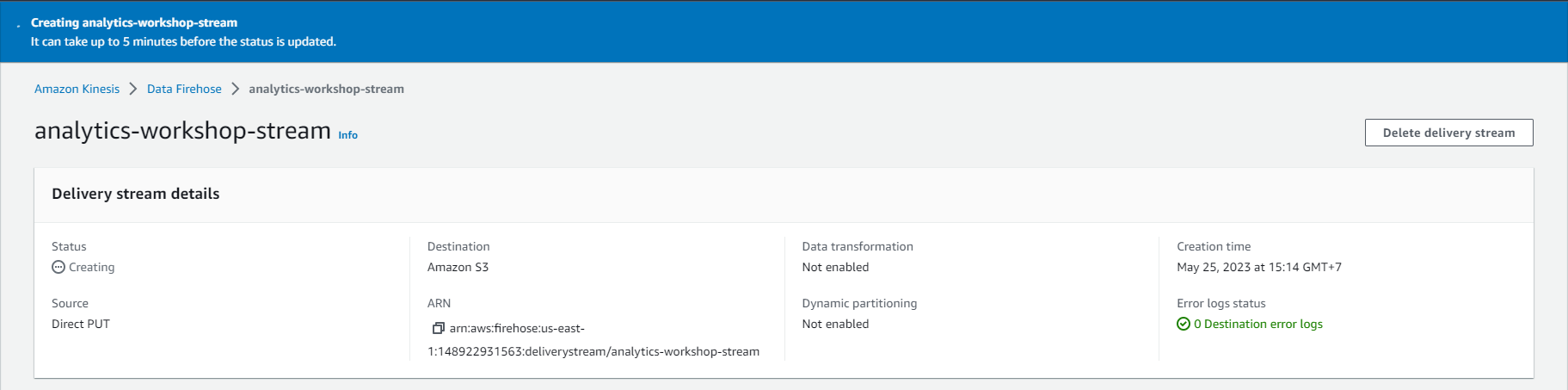
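For reference, the console settings above map roughly onto a `create_delivery_stream` request in boto3. This is a sketch under stated assumptions rather than a replacement for the console steps: the account ID `123456789012`, the log group and log stream names, and the helper names `build_delivery_stream_request` and `create_stream` are placeholders introduced here. Unlike the console, the API does not create the IAM role for you, so `role_arn` must point to an existing role, and the log group and log stream likely need to exist beforehand.

```python
import json


def build_delivery_stream_request(bucket_name: str, role_arn: str) -> dict:
    """Build keyword arguments for firehose.create_delivery_stream."""
    stream_name = "analytics-workshop-stream"
    return {
        "DeliveryStreamName": stream_name,
        "DeliveryStreamType": "DirectPut",           # Source: Direct PUT
        "ExtendedS3DestinationConfiguration": {       # Destination: Amazon S3
            "RoleARN": role_arn,
            "BucketARN": f"arn:aws:s3:::{bucket_name}",
            "Prefix": "data/raw/",                    # keep the trailing slash
            "BufferingHints": {"SizeInMBs": 1, "IntervalInSeconds": 60},
            "CompressionFormat": "UNCOMPRESSED",
            "EncryptionConfiguration": {"NoEncryptionConfig": "NoEncryption"},
            "CloudWatchLoggingOptions": {
                "Enabled": True,
                # Assumed names; adjust to whatever exists in your account.
                "LogGroupName": f"/aws/kinesisfirehose/{stream_name}",
                "LogStreamName": "DestinationDelivery",
            },
            # Transformation, format conversion, and dynamic partitioning
            # are left out, which keeps them disabled.
        },
        "Tags": [{"Key": "workshop", "Value": "AnalyticsOnAWS"}],
    }


def create_stream(request: dict) -> dict:
    """Send the request to AWS. Requires boto3 and valid credentials."""
    import boto3  # imported lazily so the sketch can be read and run offline

    client = boto3.client("firehose")
    return client.create_delivery_stream(**request)


request = build_delivery_stream_request(
    bucket_name="yourname-analytics-workshop-bucket",
    # Placeholder ARN: replace the account ID and role suffix with your own.
    role_arn="arn:aws:iam::123456789012:role/KinesisFirehoseServiceRole-xxxx",
)
print(json.dumps(request, indent=2))
```

Calling `create_stream(request)` would submit the request. It is deliberately not invoked here, so running the snippet only prints the configuration for review.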

- The delivery stream is created successfully.
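If you prefer to confirm the result programmatically, a delivery stream reports its state through `describe_delivery_stream`, moving from `CREATING` to `ACTIVE`. The sketch below polls for that transition. The helper `wait_until_active` and its polling defaults are assumptions made for illustration, and `client` is expected to be a boto3 Firehose client.

```python
import time


def wait_until_active(client, stream_name: str,
                      poll_seconds: float = 5.0, max_polls: int = 60) -> bool:
    """Poll the stream status; return True once ACTIVE, False on failure or timeout."""
    for _ in range(max_polls):
        response = client.describe_delivery_stream(DeliveryStreamName=stream_name)
        status = response["DeliveryStreamDescription"]["DeliveryStreamStatus"]
        if status == "ACTIVE":
            return True
        if status == "CREATING_FAILED":
            return False
        time.sleep(poll_seconds)  # still CREATING, so wait and try again
    return False
```

A typical call would be `wait_until_active(boto3.client("firehose"), "analytics-workshop-stream")`, which needs boto3 installed and AWS credentials configured.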